
Scientist + Engineer interested in understanding and maximizing the perceived quality of visual experiences. I currently work on designing 📸 algorithms at Apple. I did my PhD under the mentorship of Piotr Didyk, working towards the dream of real-time AR/VR that is visually indistinguishable from the real world. I have also worked with the Applied Vision Science and Display Systems Research teams at Meta on real-time, perceptually optimized computational display algorithms for AR/VR. During my Master's, I was a research fellow at KAIST, investigating how image/video enhancement neural networks understand visual quality, and how we can teach them to perceive it the same way humans do.

CV / Google Scholar / LinkedIn / Twitter


My primary interests lie at the intersection of human vision science and real-time imaging. I work on understanding, quantifying, and maximizing perceptual realism, accurate reproduction, and quality (with components such as spatial quality, dynamic range, depth, motion, and color) for real-time image/video capture (computational photography), synthesis (rendering/graphics), and display (computational display). The long-term goals I aim to push towards are:


Representative projects are highlighted; these are the ones I consider my most original and fundamental ideas.


A step towards practical and efficient real-time, deep-learning-based image/video enhancement that leverages the limitations of human vision. We design a perceptual model and pipeline that control the local quality and deep learning resources for image/video super-resolution so that there is no visible loss in quality, even with a very significant reduction in computational cost and runtime. The framework is general and can be applied to any deep-learning-based super-resolution architecture. It can also be applied to efficient deep denoising, frame interpolation, and possibly generative image/video synthesis without noticeable quality loss. ICCV 2025 - Human Vision Inspired Computer Vision Workshop


Understanding stereoscopic depth perception in foveated AR/VR. We derive a perceptual model that demonstrates that the blur intensities applied in common foveation procedures do not affect stereoacuity. Additionally, our findings suggest that it is important to maintain high depth quality even in strongly foveated stereoscopic content. SIGGRAPH 2025


We demonstrate that foveated rendering may inhibit motion perception, making AR/VR appear slower than it physically is. We propose the theory of Motion Metamers of human vision: videos that are structurally different from one another but indistinguishable to human peripheral vision in both spatial and motion perception. We present the first technique to synthesize motion metamers for AR/VR, all in real time and completely unsupervised (no high-quality reference required). SIGGRAPH 2024


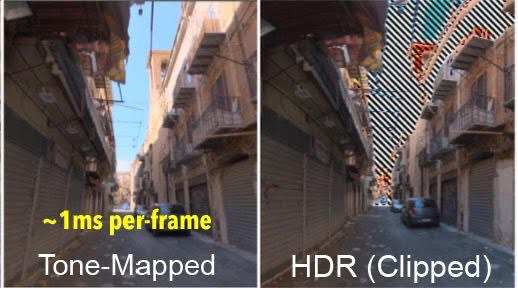

An ultra-fast framework (under 1 ms per frame on standalone VR) that adaptively maintains the perceptual appearance of HDR content after tone mapping. The framework relates human contrast perception across very different luminance scales, and then optimizes any tone-mapping curve to minimize the perceptual difference. SIGGRAPH Asia 2023


To our knowledge, the fastest (200 FPS at 4K) and first no-reference spatial metamers of human peripheral vision, specifically tailored for direct integration into the real-time VR foveated rendering pipeline. Saves up to 40% of rendering time over traditional foveated rendering, without visible loss in quality. SIGGRAPH 2022
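For context on the baseline mentioned above: traditional foveated rendering lowers shading resolution as eccentricity (angular distance from the gaze point) grows. The Python sketch below illustrates only that baseline, not the metamer method itself, using a linear minimum-angle-of-resolution (MAR) acuity model. The MAR parameters, the one-sample-per-MAR rule, and the helper names (`eccentricity_map`, `required_ppd`, `shading_fraction`) are illustrative assumptions.

```python
import numpy as np

# Assumed, illustrative acuity parameters for a linear minimum-angle-of-resolution
# (MAR) model, MAR(e) = FOVEAL_MAR_DEG + MAR_SLOPE * e. Not taken from the paper.
FOVEAL_MAR_DEG = 1.0 / 60.0  # about 1 arcminute at the fovea
MAR_SLOPE = 0.022            # MAR increase (degrees) per degree of eccentricity


def eccentricity_map(height, width, gaze_xy, pixels_per_degree):
    """Approximate angular distance (degrees) of every pixel from the gaze point.

    Small-angle approximation: pixel distance divided by pixels per degree.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    dist_px = np.hypot(xs - gaze_xy[0], ys - gaze_xy[1])
    return dist_px / pixels_per_degree


def required_ppd(ecc_deg):
    """Pixels per degree needed at each eccentricity, assuming one sample per MAR."""
    return 1.0 / (FOVEAL_MAR_DEG + MAR_SLOPE * np.asarray(ecc_deg, dtype=float))


def shading_fraction(ecc_deg, display_ppd):
    """Fraction of full-resolution shading work a foveated renderer would need.

    Linear resolution is capped at the display's own resolution; shading cost
    scales with area, hence the square.
    """
    linear = np.minimum(1.0, required_ppd(ecc_deg) / display_ppd)
    return float(np.mean(linear ** 2))


# Example: 1200x1080 eye buffer, gaze at the centre, about 20 pixels per degree.
ecc = eccentricity_map(1080, 1200, gaze_xy=(600, 540), pixels_per_degree=20.0)
print(f"shading work vs. full resolution: {shading_fraction(ecc, 20.0):.3f}")
```

A real renderer would typically quantize this falloff into a few discrete shading-rate regions rather than evaluating it per pixel.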


An investigation into why the representations learned by image recognition CNNs work remarkably well as features of perceptual quality (e.g., perceptual loss). We theorize that these image classification representations learn to be spectrally sensitive to the same spatial frequencies to which the human visual system is most sensitive, so they can effectively encode perceptually visible distortions. ECCV 2020
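The human side of this comparison is usually summarized by a contrast sensitivity function (CSF), which is band-pass and peaks at mid spatial frequencies. As a minimal illustration, the sketch below evaluates the classic Mannos-Sakrison (1974) CSF approximation; this model is chosen here for simplicity and is not necessarily the one used in the ECCV 2020 paper. The function name `csf_mannos_sakrison` is hypothetical.

```python
import numpy as np


def csf_mannos_sakrison(f_cpd):
    """Relative contrast sensitivity at spatial frequency f (cycles per degree).

    Mannos-Sakrison (1974) approximation:
    A(f) = 2.6 * (0.0192 + 0.114 f) * exp(-(0.114 f) ** 1.1)
    """
    f = np.asarray(f_cpd, dtype=float)
    return 2.6 * (0.0192 + 0.114 * f) * np.exp(-((0.114 * f) ** 1.1))


# Sample the curve from 0.5 to 60 cycles/degree and find where it peaks.
freqs = np.linspace(0.5, 60.0, 1000)
sens = csf_mannos_sakrison(freqs)
peak_cpd = freqs[np.argmax(sens)]
print(f"peak sensitivity near {peak_cpd:.1f} cycles/degree")
```

The curve peaks at roughly 8 cycles per degree and falls off at both lower and higher frequencies; the hypothesis above is that classification CNN features show a similar spectral emphasis.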


A human contrast perception inspired spatial attention mask that makes the deep learning pipeline aware of perceptually important visual information in images. ICCV 2019 - Learning for Computational Imaging Workshop


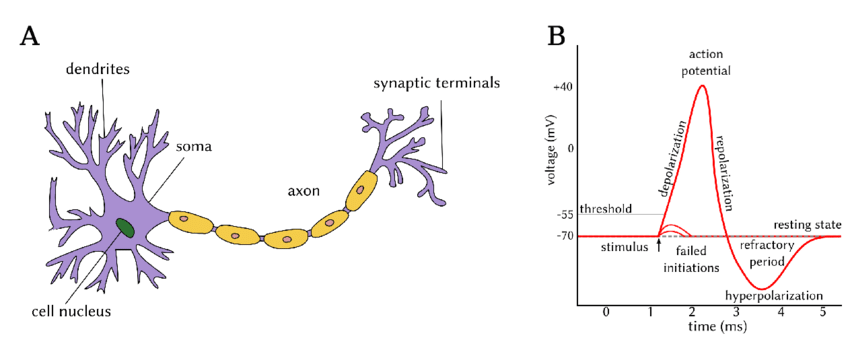

A signal processing pipeline for unsupervised sorting of brain signals on implantable neural chips, primarily for neuro-prosthetics. Computer Methods and Programs in Biomedicine, 2019


A new non-linear signal processing filter for detecting noisy brain action potentials. IEEE Engineering in Medicine and Biology Conference (EMBC), 2017
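As background for non-linear spike detection, a widely used baseline is the nonlinear energy operator (NEO, also called the Teager-Kaiser energy operator), psi[n] = x[n]^2 - x[n-1] * x[n+1], which emphasizes segments that are both high in amplitude and high in frequency. The sketch below is that classic operator, not the new filter proposed in the EMBC 2017 paper; the threshold multiplier `k = 8`, the helper names, and the synthetic test signal are assumptions for illustration.

```python
import numpy as np


def neo(x):
    """Nonlinear energy operator: psi[n] = x[n]**2 - x[n-1] * x[n+1].

    The two endpoints have no neighbour on one side, so they are set to 0.
    """
    x = np.asarray(x, dtype=float)
    psi = np.zeros_like(x)
    psi[1:-1] = x[1:-1] ** 2 - x[:-2] * x[2:]
    return psi


def detect_spikes(x, k=8.0):
    """Indices where the NEO output exceeds k times its mean (k is an assumed value)."""
    psi = neo(x)
    return np.flatnonzero(psi > k * np.mean(psi))


# Synthetic example: low-amplitude Gaussian noise plus one short biphasic "spike".
rng = np.random.default_rng(0)
signal = 0.05 * rng.standard_normal(2000)
signal[1000:1003] += [1.5, 3.0, -1.5]
print(detect_spikes(signal))
```

Scaling the threshold by the mean NEO output lets it adapt to the recording's noise level without a clean reference, which matters for noisy implanted recordings.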